Jun 25, 2023

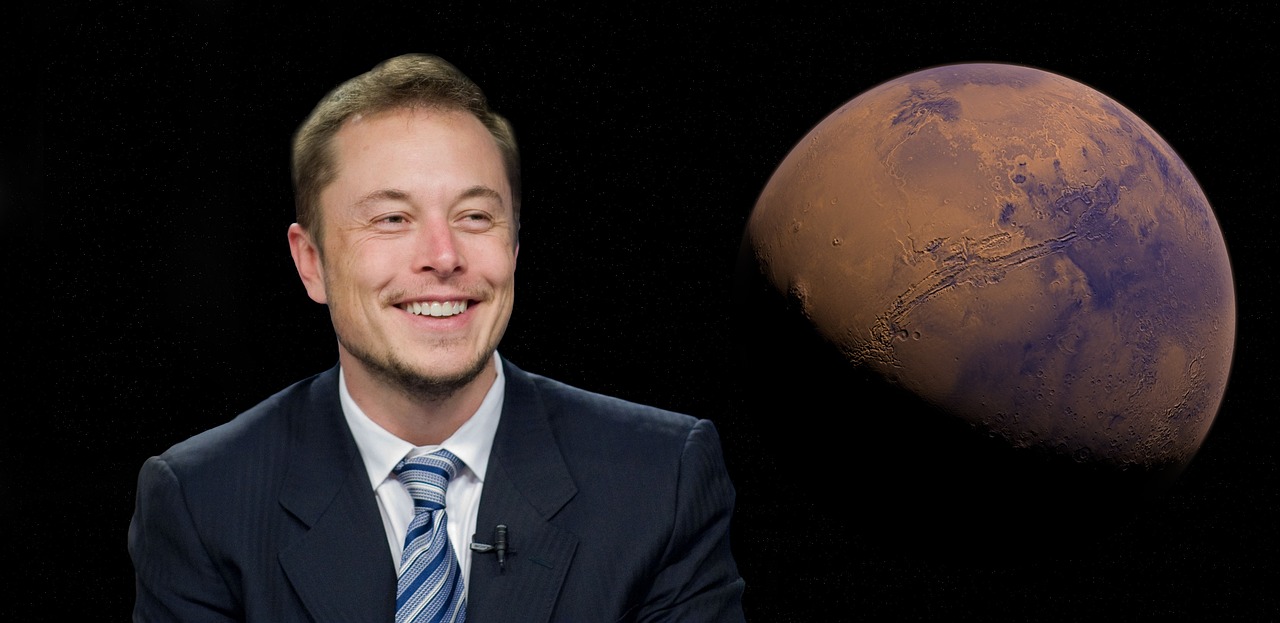

Elon Musk: Australia threatens to fine Twitter over online hate

Twitter, the social media platform owned by Elon Musk, is facing increased scrutiny in Australia over its handling of online hate. Despite having a smaller user base than other platforms, Twitter has become the subject of the highest number of complaints in the country. Australia's online safety commissioner has issued a legal notice demanding evidence from Twitter within 28 days, with the possibility of significant fines. This blog examines the evolving situation, discussing the demands placed on Twitter, the particular challenges it faces under Musk's ownership, and the potential impact on the platform's content moderation practices.

Under Elon Musk's ownership, Twitter has pledged to protect free speech but has faced criticism for its handling of hate speech and extremist content. Australia's online safety commissioner has highlighted the concerning number of complaints about online hate directed at Twitter. The company's response to those complaints and its ability to moderate its platform effectively are now being questioned. This blog examines the implications of these demands for Twitter's operations, its reputation, and the broader discussion surrounding online safety and regulation.

The Rise of Complaints and Regulatory Action:

Twitter's handling of online hate has come under scrutiny in Australia, with Julie Inman Grant, the eSafety Commissioner, revealing that a large share of complaints about online hate in the country are directed at the platform. Grant expressed concern that Twitter appears to have neglected its responsibility to address hate speech effectively. This alarming revelation prompted the regulator to issue a legal notice demanding an explanation from the social media giant. The commissioner also highlighted reports of previously banned accounts being reinstated, which has emboldened extremist individuals and groups, including neo-Nazis, both in Australia and overseas. This demand for accountability is part of the regulator's ongoing efforts to ensure that social media companies take responsibility for their content moderation practices and create safer online environments for users.

The revelations surrounding Twitter's handling of online hate in Australia raise crucial questions about the platform's commitment to combating hate speech and extremism. With one-third of all complaints about online hate in the country directed at Twitter, there are clearly significant concerns about the platform's effectiveness in tackling this issue. Grant's emphasis on the reinstatement of banned accounts and the resulting impact on extremist groups underscores the potential consequences of inadequate content moderation. The demand for evidence sends a strong message to social media companies about the importance of addressing hate speech and taking proactive measures to create a safer online space for users.

Twitter's Accountability and Leadership Changes:

Under Elon Musk's ownership, Twitter has faced increasing scrutiny over how it balances its commitment to protecting free speech with combating online hate. The company has experienced significant staff changes in its trust and safety department. Ella Irwin, the second head of trust and safety during Musk's ownership, resigned without publicly disclosing the reasons behind her departure. Her exit came shortly after Musk publicly criticized a content moderation decision related to misgendering. Irwin's predecessor, Yoel Roth, also left shortly after Musk's takeover. The head of trust and safety plays a vital role in content moderation, an area that has drawn attention given Musk's involvement.

Australia's Demand for Transparency:

The demand for transparency and accountability from Twitter over online hate aligns with the growing international trend of holding social media companies responsible for their platforms. Julie Inman Grant, the eSafety Commissioner, has stressed the urgency for Twitter to address the pervasive problem of online hate, particularly given the platform's disproportionately high number of complaints relative to its user base. Grant's demand for an explanation, and the potential penalties for non-compliance, send a strong message that social media companies must prioritize the safety and well-being of their users by actively combating hate speech and implementing effective content moderation practices. This call for greater accountability is part of a broader campaign to foster a safer digital environment and ensure that social media platforms play a proactive role in preventing harmful content.

The regulator's efforts reflect the growing recognition of social media's impact on society, as well as the need for robust mechanisms to address online hate. By holding platforms like Twitter accountable for their handling of hate speech, regulators are striving to create a safer online space in which users can interact without fear of harassment or discrimination. This push for transparency and accountability represents a significant step toward promoting responsible digital citizenship and underscores the responsibility of social media companies to actively combat online hate and protect the well-being of their users.

Twitter's Transformation under Musk:

Since Elon Musk's acquisition of Twitter, significant changes have taken place within the company. Roughly 75% of its staff, including abuse-monitoring teams, have been let go, raising concerns about the platform's capacity to deal effectively with online hate. Musk has also changed the company's verification process and faced criticism for the impact on content moderation efforts. Advertisers have increasingly withdrawn from the platform, raising questions about Twitter's ability to sustain its business model amid these challenges. With Linda Yaccarino, formerly of NBCUniversal, taking over as CEO, the company aims to navigate these issues and regain trust.

Conclusion:

Twitter's handling of online hate has come under scrutiny, prompting Australia's cyber watchdog to demand evidence. The platform's status as the most complained-about platform in the country raises concerns about its content moderation practices. Under Elon Musk's ownership, Twitter has experienced significant staff changes and faced criticism for its approach to tackling online hate. The demands for transparency and accountability underscore the growing importance of social media companies' obligation to address harmful content. As Twitter navigates these challenges and undergoes leadership changes, the outcome will not only shape the platform's future but also have broader implications for the regulation of online hate speech and the role of social media in society.